commit

d2705c3a33

24 changed files with 5967 additions and 0 deletions

BIN

.DS_Store

View File

+ 1

- 0

Docs/Docs.txt

View File

| @@ -0,0 +1 @@ | |||

| the docs file repo saves here | |||

BIN

Docs/ffpip.jpg

View File

+ 531

- 0

Inference_individually.py

View File

| @@ -0,0 +1,531 @@ | |||

| from pathlib import Path | |||

| import numpy as np | |||

| import matplotlib.pyplot as plt | |||

| from utils import sample_prompt | |||

| from collections import defaultdict | |||

| import torchvision.transforms as transforms | |||

| import torch | |||

| from torch import nn | |||

| import torch.nn.functional as F | |||

| from segment_anything.utils.transforms import ResizeLongestSide | |||

| import albumentations as A | |||

| from albumentations.pytorch import ToTensorV2 | |||

| from einops import rearrange | |||

| import random | |||

| from tqdm import tqdm | |||

| from time import sleep | |||

| from data import * | |||

| from time import time | |||

| from PIL import Image | |||

| from sklearn.model_selection import KFold | |||

| from shutil import copyfile | |||

| from args import get_arguments | |||

| # import wandb_handler | |||

| args = get_arguments() | |||

| def save_img(img, dir): | |||

| img = img.clone().cpu().numpy() + 100 | |||

| if len(img.shape) == 3: | |||

| img = rearrange(img, "c h w -> h w c") | |||

| img_min = np.amin(img, axis=(0, 1), keepdims=True) | |||

| img = img - img_min | |||

| img_max = np.amax(img, axis=(0, 1), keepdims=True) | |||

| img = (img / img_max * 255).astype(np.uint8) | |||

| img = Image.fromarray(img) | |||

| else: | |||

| img_min = img.min() | |||

| img = img - img_min | |||

| img_max = img.max() | |||

| if img_max != 0: | |||

| img = img / img_max * 255 | |||

| img = Image.fromarray(img).convert("L") | |||

| img.save(dir) | |||

| class loss_fn(torch.nn.Module): | |||

| def __init__(self, alpha=0.7, gamma=2.0, epsilon=1e-5): | |||

| super(loss_fn, self).__init__() | |||

| self.alpha = alpha | |||

| self.gamma = gamma | |||

| self.epsilon = epsilon | |||

| def dice_loss(self, logits, gt, eps=1): | |||

| probs = torch.sigmoid(logits) | |||

| probs = probs.view(-1) | |||

| gt = gt.view(-1) | |||

| intersection = (probs * gt).sum() | |||

| dice_coeff = (2.0 * intersection + eps) / (probs.sum() + gt.sum() + eps) | |||

| loss = 1 - dice_coeff | |||

| return loss | |||

| def focal_loss(self, logits, gt, gamma=2): | |||

| logits = logits.reshape(-1, 1) | |||

| gt = gt.reshape(-1, 1) | |||

| logits = torch.cat((1 - logits, logits), dim=1) | |||

| probs = torch.sigmoid(logits) | |||

| pt = probs.gather(1, gt.long()) | |||

| modulating_factor = (1 - pt) ** gamma | |||

| focal_loss = -modulating_factor * torch.log(pt + 1e-12) | |||

| loss = focal_loss.mean() | |||

| return loss # Store as a Python number to save memory | |||

| def forward(self, logits, target): | |||

| logits = logits.squeeze(1) | |||

| target = target.squeeze(1) | |||

| # Dice Loss | |||

| # prob = F.softmax(logits, dim=1)[:, 1, ...] | |||

| dice_loss = self.dice_loss(logits, target) | |||

| # Focal Loss | |||

| focal_loss = self.focal_loss(logits, target.squeeze(-1)) | |||

| alpha = 20.0 | |||

| # Combined Loss | |||

| combined_loss = alpha * focal_loss + dice_loss | |||

| return combined_loss | |||

| def img_enhance(img2, coef=0.2): | |||

| img_mean = np.mean(img2) | |||

| img_max = np.max(img2) | |||

| val = (img_max - img_mean) * coef + img_mean | |||

| img2[img2 < img_mean * 0.7] = img_mean * 0.7 | |||

| img2[img2 > val] = val | |||

| return img2 | |||

| def dice_coefficient(logits, gt): | |||

| eps=1 | |||

| binary_mask = logits>0 | |||

| intersection = (binary_mask * gt).sum(dim=(-2,-1)) | |||

| dice_scores = (2.0 * intersection + eps) / (binary_mask.sum(dim=(-2,-1)) + gt.sum(dim=(-2,-1)) + eps) | |||

| return dice_scores.mean() | |||

| def calculate_recall(pred, target): | |||

| smooth = 1 | |||

| batch_size = pred.shape[0] | |||

| recall_scores = [] | |||

| binary_mask = pred>0 | |||

| for i in range(batch_size): | |||

| true_positive = ((binary_mask[i] == 1) & (target[i] == 1)).sum().item() | |||

| false_negative = ((binary_mask[i] == 0) & (target[i] == 1)).sum().item() | |||

| recall = (true_positive + smooth) / ((true_positive + false_negative) + smooth) | |||

| recall_scores.append(recall) | |||

| return sum(recall_scores) / len(recall_scores) | |||

| def calculate_precision(pred, target): | |||

| smooth = 1 | |||

| batch_size = pred.shape[0] | |||

| precision_scores = [] | |||

| binary_mask = pred>0 | |||

| for i in range(batch_size): | |||

| true_positive = ((binary_mask[i] == 1) & (target[i] == 1)).sum().item() | |||

| false_positive = ((binary_mask[i] == 1) & (target[i] == 0)).sum().item() | |||

| precision = (true_positive + smooth) / ((true_positive + false_positive) + smooth) | |||

| precision_scores.append(precision) | |||

| return sum(precision_scores) / len(precision_scores) | |||

| def calculate_jaccard(pred, target): | |||

| smooth = 1 | |||

| batch_size = pred.shape[0] | |||

| jaccard_scores = [] | |||

| binary_mask = pred>0 | |||

| for i in range(batch_size): | |||

| true_positive = ((binary_mask[i] == 1) & (target[i] == 1)).sum().item() | |||

| false_positive = ((binary_mask[i] == 1) & (target[i] == 0)).sum().item() | |||

| false_negative = ((binary_mask[i] == 0) & (target[i] == 1)).sum().item() | |||

| jaccard = (true_positive + smooth) / (true_positive + false_positive + false_negative + smooth) | |||

| jaccard_scores.append(jaccard) | |||

| return sum(jaccard_scores) / len(jaccard_scores) | |||

| def calculate_specificity(pred, target): | |||

| smooth = 1 | |||

| batch_size = pred.shape[0] | |||

| specificity_scores = [] | |||

| binary_mask = pred>0 | |||

| for i in range(batch_size): | |||

| true_negative = ((binary_mask[i] == 0) & (target[i] == 0)).sum().item() | |||

| false_positive = ((binary_mask[i] == 1) & (target[i] == 0)).sum().item() | |||

| specificity = (true_negative + smooth) / (true_negative + false_positive + smooth) | |||

| specificity_scores.append(specificity) | |||

| return sum(specificity_scores) / len(specificity_scores) | |||

| def what_the_f(low_res_masks,label): | |||

| low_res_label = F.interpolate(label, low_res_masks.shape[-2:]) | |||

| dice = dice_coefficient( | |||

| low_res_masks, low_res_label | |||

| ) | |||

| recall=calculate_recall(low_res_masks, low_res_label) | |||

| precision =calculate_precision(low_res_masks, low_res_label) | |||

| jaccard = calculate_jaccard(low_res_masks, low_res_label) | |||

| return dice , precision , recall , jaccard | |||

| accumaltive_batch_size = 8 | |||

| batch_size = 1 | |||

| num_workers = 2 | |||

| slice_per_image = 1 | |||

| num_epochs = 40 | |||

| sample_size = 3660 | |||

| # sample_size = 43300 | |||

| # image_size=sam_model.image_encoder.img_size | |||

| image_size = 1024 | |||

| exp_id = 0 | |||

| found = 0 | |||

| layer_n = 4 | |||

| L = layer_n | |||

| a = np.full(L, layer_n) | |||

| params = {"M": 255, "a": a, "p": 0.35} | |||

| model_type = "vit_h" | |||

| checkpoint = "checkpoints/sam_vit_h_4b8939.pth" | |||

| device = "cuda:0" | |||

| from segment_anything import SamPredictor, sam_model_registry | |||

| ##################################main model####################################### | |||

| class panc_sam(nn.Module): | |||

| def __init__(self, *args, **kwargs) -> None: | |||

| super().__init__(*args, **kwargs) | |||

| #Promptless | |||

| sam = torch.load(args.pointbasemodel).sam | |||

| self.prompt_encoder = sam.prompt_encoder | |||

| self.mask_decoder = sam.mask_decoder | |||

| for param in self.prompt_encoder.parameters(): | |||

| param.requires_grad = False | |||

| for param in self.mask_decoder.parameters(): | |||

| param.requires_grad = False | |||

| #with Prompt | |||

| sam = torch.load( | |||

| args.promptprovider | |||

| ).sam | |||

| self.image_encoder = sam.image_encoder | |||

| self.prompt_encoder2 = sam.prompt_encoder | |||

| self.mask_decoder2 = sam.mask_decoder | |||

| for param in self.image_encoder.parameters(): | |||

| param.requires_grad = False | |||

| for param in self.prompt_encoder2.parameters(): | |||

| param.requires_grad = False | |||

| def forward(self, input_images,box=None): | |||

| # input_images = torch.stack([x["image"] for x in batched_input], dim=0) | |||

| # raise ValueError(input_images.shape) | |||

| with torch.no_grad(): | |||

| image_embeddings = self.image_encoder(input_images).detach() | |||

| outputs_prompt = [] | |||

| outputs = [] | |||

| for curr_embedding in image_embeddings: | |||

| with torch.no_grad(): | |||

| sparse_embeddings, dense_embeddings = self.prompt_encoder( | |||

| points=None, | |||

| boxes=None, | |||

| masks=None, | |||

| ) | |||

| low_res_masks, _ = self.mask_decoder( | |||

| image_embeddings=curr_embedding, | |||

| image_pe=self.prompt_encoder.get_dense_pe().detach(), | |||

| sparse_prompt_embeddings=sparse_embeddings.detach(), | |||

| dense_prompt_embeddings=dense_embeddings.detach(), | |||

| multimask_output=False, | |||

| ) | |||

| outputs_prompt.append(low_res_masks) | |||

| # raise ValueError(low_res_masks) | |||

| # points, point_labels = sample_prompt((low_res_masks > 0).float()) | |||

| points, point_labels = sample_prompt(low_res_masks) | |||

| points = points * 4 | |||

| points = (points, point_labels) | |||

| with torch.no_grad(): | |||

| sparse_embeddings, dense_embeddings = self.prompt_encoder2( | |||

| points=points, | |||

| boxes=None, | |||

| masks=None, | |||

| ) | |||

| low_res_masks, _ = self.mask_decoder2( | |||

| image_embeddings=curr_embedding, | |||

| image_pe=self.prompt_encoder2.get_dense_pe().detach(), | |||

| sparse_prompt_embeddings=sparse_embeddings.detach(), | |||

| dense_prompt_embeddings=dense_embeddings.detach(), | |||

| multimask_output=False, | |||

| ) | |||

| outputs.append(low_res_masks) | |||

| low_res_masks_promtp = torch.cat(outputs_prompt, dim=0) | |||

| low_res_masks = torch.cat(outputs, dim=0) | |||

| return low_res_masks, low_res_masks_promtp | |||

| ##################################end####################################### | |||

| ##################################Augmentation####################################### | |||

| augmentation = A.Compose( | |||

| [ | |||

| A.Rotate(limit=30, p=0.5), | |||

| A.RandomBrightnessContrast(brightness_limit=0.3, contrast_limit=0.3, p=1), | |||

| A.RandomResizedCrop(1024, 1024, scale=(0.9, 1.0), p=1), | |||

| A.HorizontalFlip(p=0.5), | |||

| A.CLAHE(clip_limit=2.0, tile_grid_size=(8, 8), p=0.5), | |||

| A.CoarseDropout( | |||

| max_holes=8, | |||

| max_height=16, | |||

| max_width=16, | |||

| min_height=8, | |||

| min_width=8, | |||

| fill_value=0, | |||

| p=0.5, | |||

| ), | |||

| A.RandomScale(scale_limit=0.3, p=0.5), | |||

| # A.GaussNoise(var_limit=(10.0, 50.0), p=0.5), | |||

| # A.GridDistortion(p=0.5), | |||

| ] | |||

| ) | |||

| ##################model load##################### | |||

| panc_sam_instance = panc_sam() | |||

| # for param in panc_sam_instance_point.parameters(): | |||

| # param.requires_grad = False | |||

| panc_sam_instance.to(device) | |||

| panc_sam_instance.train() | |||

| ##################load data####################### | |||

| test_dataset = PanDataset( | |||

| [args.test_dir], | |||

| [args.test_labels_dir], | |||

| [["NIH_PNG",1]], | |||

| image_size, | |||

| slice_per_image=slice_per_image, | |||

| train=False, | |||

| ) | |||

| test_loader = DataLoader( | |||

| test_dataset, | |||

| batch_size=batch_size, | |||

| collate_fn=test_dataset.collate_fn, | |||

| shuffle=False, | |||

| drop_last=False, | |||

| num_workers=num_workers, | |||

| ) | |||

| ##################end load data####################### | |||

| lr = 1e-4 | |||

| max_lr = 5e-5 | |||

| wd = 5e-4 | |||

| optimizer_main = torch.optim.Adam( | |||

| # parameters, | |||

| list(panc_sam_instance.mask_decoder2.parameters()), | |||

| lr=lr, | |||

| weight_decay=wd, | |||

| ) | |||

| scheduler_main = torch.optim.lr_scheduler.OneCycleLR( | |||

| optimizer_main, | |||

| max_lr=max_lr, | |||

| epochs=num_epochs, | |||

| steps_per_epoch=sample_size // (accumaltive_batch_size // batch_size), | |||

| ) | |||

| ##################################################### | |||

| from statistics import mean | |||

| from tqdm import tqdm | |||

| from torch.nn.functional import threshold, normalize | |||

| loss_function = loss_fn(alpha=0.5, gamma=2.0) | |||

| loss_function.to(device) | |||

| from time import time | |||

| import time as s_time | |||

| def process_model(main_model , data_loader, train=0, save_output=0): | |||

| epoch_losses = [] | |||

| results=[] | |||

| index = 0 | |||

| results = torch.zeros((2, 0, 256, 256)) | |||

| ############################# | |||

| total_dice = 0.0 | |||

| total_precision = 0.0 | |||

| total_recall =0.0 | |||

| total_jaccard = 0.0 | |||

| ############################# | |||

| num_samples = 0 | |||

| ############################# | |||

| total_dice_main =0.0 | |||

| total_precision_main = 0.0 | |||

| total_recall_main =0.0 | |||

| total_jaccard_main = 0.0 | |||

| counterb = 0 | |||

| for image, label in tqdm(data_loader, total=sample_size): | |||

| num_samples += 1 | |||

| counterb += 1 | |||

| index += 1 | |||

| image = image.to(device) | |||

| label = label.to(device).float() | |||

| ############################model and dice######################################## | |||

| box = torch.tensor([[200, 200, 750, 800]]).to(device) | |||

| low_res_masks_main,low_res_masks_prompt = main_model(image,box) | |||

| low_res_label = F.interpolate(label, low_res_masks_main.shape[-2:]) | |||

| dice_prompt, precisio_prompt , recall_prompt , jaccard_prompt = what_the_f(low_res_masks_prompt,low_res_label) | |||

| dice_main , precision_main , recall_main , jaccard_main = what_the_f(low_res_masks_main,low_res_label) | |||

| binary_mask = normalize(threshold(low_res_masks_main, 0.0,0)) | |||

| ##############prompt############### | |||

| total_dice += dice_prompt | |||

| total_precision += precisio_prompt | |||

| total_recall += recall_prompt | |||

| total_jaccard += jaccard_prompt | |||

| average_dice = total_dice / num_samples | |||

| average_precision = total_precision /num_samples | |||

| average_recall = total_recall /num_samples | |||

| average_jaccard = total_jaccard /num_samples | |||

| ##############main################## | |||

| total_dice_main+=dice_main | |||

| total_precision_main +=precision_main | |||

| total_recall_main +=recall_main | |||

| total_jaccard_main += jaccard_main | |||

| average_dice_main = total_dice_main / num_samples | |||

| average_precision_main = total_precision_main /num_samples | |||

| average_recall_main = total_recall_main /num_samples | |||

| average_jaccard_main = total_jaccard_main /num_samples | |||

| ################################### | |||

| # result = torch.cat( | |||

| # ( | |||

| # # low_res_masks_main[0].detach().cpu().reshape(1, 1, 256, 256), | |||

| # binary_mask[0].detach().cpu().reshape(1, 1, 256, 256), | |||

| # ), | |||

| # dim=0, | |||

| # ) | |||

| # results = torch.cat((results, result), dim=1) | |||

| if counterb == sample_size and train: | |||

| break | |||

| elif counterb == sample_size and not train: | |||

| break | |||

| return epoch_losses, results, average_dice,average_precision ,average_recall, average_jaccard,average_dice_main,average_precision_main,average_recall_main,average_jaccard_main | |||

| def train_model( test_loader, K_fold=False, N_fold=7, epoch_num_start=7): | |||

| print("Train model started.") | |||

| test_losses = [] | |||

| test_epochs = [] | |||

| dice = [] | |||

| dice_main = [] | |||

| dice_test = [] | |||

| dice_test_main =[] | |||

| results = [] | |||

| index = 0 | |||

| print("Testing:") | |||

| test_epoch_losses, epoch_results, average_dice_test,average_precision ,average_recall, average_jaccard,average_dice_test_main,average_precision_main,average_recall_main,average_jaccard_main = process_model( | |||

| panc_sam_instance,test_loader | |||

| ) | |||

| import torchvision.transforms.functional as TF | |||

| dice_test.append(average_dice_test) | |||

| dice_test_main.append(average_dice_test_main) | |||

| print("######################Prompt##########################") | |||

| print(f"Test Dice : {average_dice_test}") | |||

| print(f"Test presision : {average_precision}") | |||

| print(f"Test recall : {average_recall}") | |||

| print(f"Test jaccard : {average_jaccard}") | |||

| print("######################Main##########################") | |||

| print(f"Test Dice main : {average_dice_test_main}") | |||

| print(f"Test presision main : {average_precision_main}") | |||

| print(f"Test recall main : {average_recall_main}") | |||

| print(f"Test jaccard main : {average_jaccard_main}") | |||

| # results.append(epoch_results) | |||

| # del epoch_results | |||

| del average_dice_test | |||

| # return train_losses, results | |||

| train_model(test_loader) | |||

+ 51

- 0

README.md

View File

| @@ -0,0 +1,51 @@ | |||

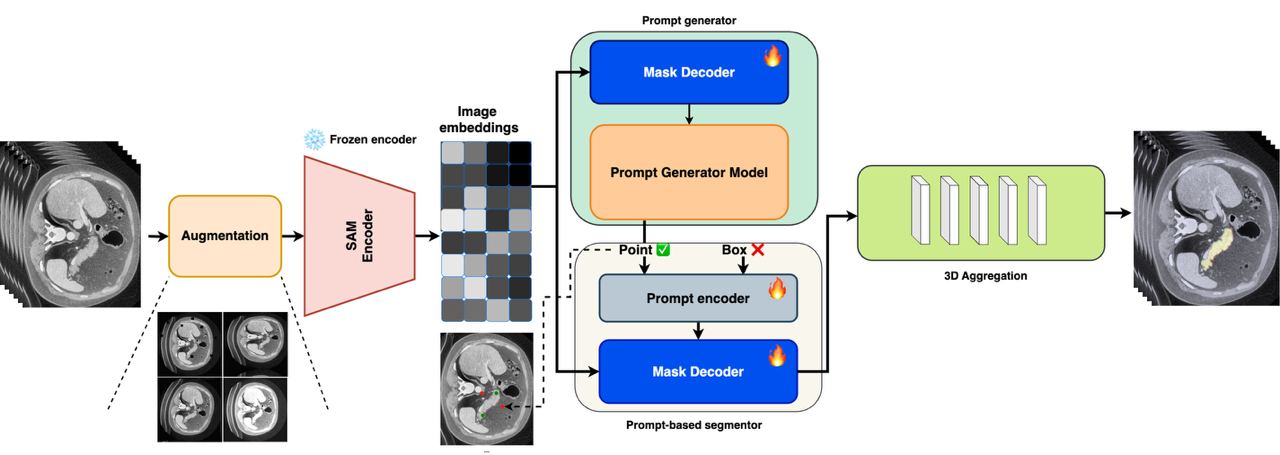

| # Pancreas Segmentation in CT Scan Images: Harnessing the Power of SAM | |||

| <p align="center"> | |||

| <img width="100%" src="Docs/ffpip.jpg"> | |||

| </p> | |||

| In this repositpry we describe the code impelmentation of the paper: "Pancreas Segmentation in CT Scan Images: Harnessing the Power of SAM" | |||

| ## Requirments | |||

| Frist step is install [requirements.txt](/requirements.txt) bakages in a conda eviroment. | |||

| Clone the [SAM](https://github.com/facebookresearch/segment-anything) repository. | |||

| Use the code below to download the suggested checkpoint of SAM: | |||

| ``` | |||

| !wget https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth" | |||

| ``` | |||

| ## Dadaset and data loader description | |||

| For this segmentation report we used to populare pancreas datas: | |||

| - [NIH pancreas CT](https://wiki.cancerimagingarchive.net/display/Public/Pancreas-CT) | |||

| - [AbdomenCT-1K](https://github.com/JunMa11/AbdomenCT-1K) | |||

| After downloading and allocating datasets, we used a specefice data format (.npy) and for this step [save.py](/data_handler/save.py) provided. `save_dir` and `labels_save_dir` should modify. | |||

| As defualt `data.py` and `data_loader_group.py` are used in the desired codes. | |||

| Address can be modify in [args.py](args.py). | |||

| Due to anonymous code submitiom we haven't share our Model Weights. | |||

| ## Train model | |||

| For train model we use the files [fine_tune_good.py](fine_tune_good.py) and [fine_tune_good_unet.py](fine_tune_good_unet.py) and the command bellow is an example for start training with some costume settings. | |||

| ``` | |||

| python3 fine_tune_good_unet.py --sample_size 66 --accumulative_batch_size 4 --num_epochs 60 --num_workers 8 --batch_step_one 20 --batch_step_two 30 --lr 3e-4 --inference | |||

| ``` | |||

| ## Inference Model | |||

| To infrence both types of decoders just run the [double_decoder_infrence.py](double_decoder_infrence.py) | |||

| To get individually infrence SAM with or without prompt use [Inference_individually.py](Inference_individually) | |||

| ## 3D Aggregator | |||

| To run the `3D Aggregator` codes are available in [kernel](/kernel) folder and just run the [run.sh](kernel/run.sh) file. | |||

| becuase of opening so many files, the `u -limit` thresh hold should be increased using: | |||

| ``` | |||

| u -limit 15000 | |||

| ``` | |||

+ 662

- 0

SAM_with_prompt.py

View File

| @@ -0,0 +1,662 @@ | |||

| debug = 0 | |||

| from pathlib import Path | |||

| import numpy as np | |||

| import matplotlib.pyplot as plt | |||

| # import cv2 | |||

| from collections import defaultdict | |||

| import torchvision.transforms as transforms | |||

| import torch | |||

| from torch import nn | |||

| import torch.nn.functional as F | |||

| from segment_anything.utils.transforms import ResizeLongestSide | |||

| import albumentations as A | |||

| from albumentations.pytorch import ToTensorV2 | |||

| import numpy as np | |||

| from einops import rearrange | |||

| import random | |||

| from tqdm import tqdm | |||

| from time import sleep | |||

| from data import * | |||

| from time import time | |||

| from PIL import Image | |||

| from sklearn.model_selection import KFold | |||

| from shutil import copyfile | |||

| # import monai | |||

| from tqdm import tqdm | |||

| from utils import main_prompt,main_prompt_for_ground_true | |||

| from torch.autograd import Variable | |||

| from args import get_arguments | |||

| # import wandb_handler | |||

| args = get_arguments() | |||

| def save_img(img, dir): | |||

| img = img.clone().cpu().numpy() + 100 | |||

| if len(img.shape) == 3: | |||

| img = rearrange(img, "c h w -> h w c") | |||

| img_min = np.amin(img, axis=(0, 1), keepdims=True) | |||

| img = img - img_min | |||

| img_max = np.amax(img, axis=(0, 1), keepdims=True) | |||

| img = (img / img_max * 255).astype(np.uint8) | |||

| grey_img = Image.fromarray(img[:, :, 0]) | |||

| img = Image.fromarray(img) | |||

| else: | |||

| img_min = img.min() | |||

| img = img - img_min | |||

| img_max = img.max() | |||

| if img_max != 0: | |||

| img = img / img_max * 255 | |||

| img = Image.fromarray(img).convert("L") | |||

| img.save(dir) | |||

| class FocalLoss(nn.Module): | |||

| def __init__(self, gamma=2.0, alpha=0.25): | |||

| super(FocalLoss, self).__init__() | |||

| self.gamma = gamma | |||

| self.alpha = alpha | |||

| def dice_loss(self, logits, gt, eps=1): | |||

| # Convert logits to probabilities | |||

| # Flatten the tensors | |||

| # probs = probs.view(-1) | |||

| # gt = gt.view(-1) | |||

| probs = torch.sigmoid(logits) | |||

| # Compute Dice coefficient | |||

| intersection = (probs * gt).sum() | |||

| dice_coeff = (2.0 * intersection + eps) / (probs.sum() + gt.sum() + eps) | |||

| # Compute Dice Los[s | |||

| loss = 1 - dice_coeff | |||

| return loss | |||

| def focal_loss(self, pred, mask): | |||

| """ | |||

| pred: [B, 1, H, W] | |||

| mask: [B, 1, H, W] | |||

| """ | |||

| # pred=pred.reshape(-1,1) | |||

| # mask = mask.reshape(-1,1) | |||

| # assert pred.shape == mask.shape, "pred and mask should have the same shape." | |||

| p = torch.sigmoid(pred) | |||

| num_pos = torch.sum(mask) | |||

| num_neg = mask.numel() - num_pos | |||

| w_pos = (1 - p) ** self.gamma | |||

| w_neg = p**self.gamma | |||

| loss_pos = -self.alpha * mask * w_pos * torch.log(p + 1e-12) | |||

| loss_neg = -(1 - self.alpha) * (1 - mask) * w_neg * torch.log(1 - p + 1e-12) | |||

| loss = (torch.sum(loss_pos) + torch.sum(loss_neg)) / (num_pos + num_neg + 1e-12) | |||

| return loss | |||

| def forward(self, logits, target): | |||

| logits = logits.squeeze(1) | |||

| target = target.squeeze(1) | |||

| # Dice Loss | |||

| # prob = F.softmax(logits, dim=1)[:, 1, ...] | |||

| dice_loss = self.dice_loss(logits, target) | |||

| # Focal Loss | |||

| focal_loss = self.focal_loss(logits, target.squeeze(-1)) | |||

| alpha = 20.0 | |||

| # Combined Loss | |||

| combined_loss = alpha * focal_loss + dice_loss | |||

| return combined_loss | |||

| class loss_fn(torch.nn.Module): | |||

| def __init__(self, alpha=0.7, gamma=2.0, epsilon=1e-5): | |||

| super(loss_fn, self).__init__() | |||

| self.alpha = alpha | |||

| self.gamma = gamma | |||

| self.epsilon = epsilon | |||

| def tversky_loss(self, y_pred, y_true, alpha=0.8, beta=0.2, smooth=1e-2): | |||

| y_pred = torch.sigmoid(y_pred) | |||

| # raise ValueError(y_pred) | |||

| y_true_pos = torch.flatten(y_true) | |||

| y_pred_pos = torch.flatten(y_pred) | |||

| true_pos = torch.sum(y_true_pos * y_pred_pos) | |||

| false_neg = torch.sum(y_true_pos * (1 - y_pred_pos)) | |||

| false_pos = torch.sum((1 - y_true_pos) * y_pred_pos) | |||

| tversky_index = (true_pos + smooth) / ( | |||

| true_pos + alpha * false_neg + beta * false_pos + smooth | |||

| ) | |||

| return 1 - tversky_index | |||

| def focal_tversky(self, y_pred, y_true, gamma=0.75): | |||

| pt_1 = self.tversky_loss(y_pred, y_true) | |||

| return torch.pow((1 - pt_1), gamma) | |||

| def dice_loss(self, logits, gt, eps=1): | |||

| # Convert logits to probabilities | |||

| # Flatten the tensorsx | |||

| probs = torch.sigmoid(logits) | |||

| probs = probs.view(-1) | |||

| gt = gt.view(-1) | |||

| # Compute Dice coefficient | |||

| intersection = (probs * gt).sum() | |||

| dice_coeff = (2.0 * intersection + eps) / (probs.sum() + gt.sum() + eps) | |||

| # Compute Dice Los[s | |||

| loss = 1 - dice_coeff | |||

| return loss | |||

| def focal_loss(self, logits, gt, gamma=2): | |||

| logits = logits.reshape(-1, 1) | |||

| gt = gt.reshape(-1, 1) | |||

| logits = torch.cat((1 - logits, logits), dim=1) | |||

| probs = torch.sigmoid(logits) | |||

| pt = probs.gather(1, gt.long()) | |||

| modulating_factor = (1 - pt) ** gamma | |||

| # pt_false= pt<=0.5 | |||

| # modulating_factor[pt_false] *= 2 | |||

| focal_loss = -modulating_factor * torch.log(pt + 1e-12) | |||

| # Compute the mean focal loss | |||

| loss = focal_loss.mean() | |||

| return loss # Store as a Python number to save memory | |||

| def forward(self, logits, target): | |||

| logits = logits.squeeze(1) | |||

| target = target.squeeze(1) | |||

| # Dice Loss | |||

| # prob = F.softmax(logits, dim=1)[:, 1, ...] | |||

| dice_loss = self.dice_loss(logits, target) | |||

| tversky_loss = self.tversky_loss(logits, target) | |||

| # Focal Loss | |||

| focal_loss = self.focal_loss(logits, target.squeeze(-1)) | |||

| alpha = 20.0 | |||

| # Combined Loss | |||

| combined_loss = alpha * focal_loss + dice_loss | |||

| return combined_loss | |||

| def img_enhance(img2, coef=0.2): | |||

| img_mean = np.mean(img2) | |||

| img_max = np.max(img2) | |||

| val = (img_max - img_mean) * coef + img_mean | |||

| img2[img2 < img_mean * 0.7] = img_mean * 0.7 | |||

| img2[img2 > val] = val | |||

| return img2 | |||

| def dice_coefficient(pred, target): | |||

| smooth = 1 # Smoothing constant to avoid division by zero | |||

| dice = 0 | |||

| pred_index = pred | |||

| target_index = target | |||

| intersection = (pred_index * target_index).sum() | |||

| union = pred_index.sum() + target_index.sum() | |||

| dice += (2.0 * intersection + smooth) / (union + smooth) | |||

| return dice.item() | |||

| num_workers = 4 | |||

| slice_per_image = 1 | |||

| num_epochs = 80 | |||

| sample_size = 2000 | |||

| # image_size=sam_model.image_encoder.img_size | |||

| image_size = 1024 | |||

| exp_id = 0 | |||

| found = 0 | |||

| if debug: | |||

| user_input = "debug" | |||

| else: | |||

| user_input = input("Related changes: ") | |||

| while found == 0: | |||

| try: | |||

| os.makedirs(f"exps/{exp_id}-{user_input}") | |||

| found = 1 | |||

| except: | |||

| exp_id = exp_id + 1 | |||

| copyfile(os.path.realpath(__file__), f"exps/{exp_id}-{user_input}/code.py") | |||

| layer_n = 4 | |||

| L = layer_n | |||

| a = np.full(L, layer_n) | |||

| params = {"M": 255, "a": a, "p": 0.35} | |||

| device = "cuda:0" | |||

| from segment_anything import SamPredictor, sam_model_registry | |||

| # ////////////////// | |||

| class panc_sam(nn.Module): | |||

| def __init__(self, *args, **kwargs) -> None: | |||

| super().__init__(*args, **kwargs) | |||

| sam=sam_model_registry[args.model_type](args.checkpoint) | |||

| def forward(self, batched_input): | |||

| # with torch.no_grad(): | |||

| # raise ValueError(10) | |||

| input_images = torch.stack([x["image"] for x in batched_input], dim=0) | |||

| with torch.no_grad(): | |||

| image_embeddings = self.sam.image_encoder(input_images).detach() | |||

| outputs = [] | |||

| for image_record, curr_embedding in zip(batched_input, image_embeddings): | |||

| if "point_coords" in image_record: | |||

| points = (image_record["point_coords"].unsqueeze(0), image_record["point_labels"].unsqueeze(0)) | |||

| # raise ValueError(points) | |||

| else: | |||

| raise ValueError('what the f?') | |||

| points = None | |||

| # raise ValueError(image_record["point_coords"].shape) | |||

| with torch.no_grad(): | |||

| sparse_embeddings, dense_embeddings = self.sam.prompt_encoder( | |||

| points=points, | |||

| boxes=image_record.get("boxes", None), | |||

| masks=image_record.get("mask_inputs", None), | |||

| ) | |||

| low_res_masks, _ = self.sam.mask_decoder( | |||

| image_embeddings=curr_embedding.unsqueeze(0), | |||

| image_pe=self.sam.prompt_encoder.get_dense_pe().detach(), | |||

| sparse_prompt_embeddings=sparse_embeddings.detach(), | |||

| dense_prompt_embeddings=dense_embeddings.detach(), | |||

| multimask_output=False, | |||

| ) | |||

| outputs.append( | |||

| { | |||

| "low_res_logits": low_res_masks, | |||

| } | |||

| ) | |||

| low_res_masks = torch.stack([x["low_res_logits"] for x in outputs], dim=0) | |||

| return low_res_masks.squeeze(1) | |||

| # /////////////// | |||

| augmentation = A.Compose( | |||

| [ | |||

| A.Rotate(limit=90, p=0.5), | |||

| A.RandomBrightnessContrast(brightness_limit=0.3, contrast_limit=0.3, p=1), | |||

| A.RandomResizedCrop(1024, 1024, scale=(0.9, 1.0), p=1), | |||

| A.HorizontalFlip(p=0.5), | |||

| A.CLAHE(clip_limit=2.0, tile_grid_size=(8, 8), p=0.5), | |||

| A.CoarseDropout( | |||

| max_holes=8, | |||

| max_height=16, | |||

| max_width=16, | |||

| min_height=8, | |||

| min_width=8, | |||

| fill_value=0, | |||

| p=0.5, | |||

| ), | |||

| A.RandomScale(scale_limit=0.1, p=0.5), | |||

| ] | |||

| ) | |||

| panc_sam_instance = panc_sam() | |||

| panc_sam_instance.to(device) | |||

| panc_sam_instance.train() | |||

| train_dataset = PanDataset( | |||

| [args.train_dir], | |||

| [args.train_labels_dir], | |||

| [["NIH_PNG",1]], | |||

| image_size, | |||

| slice_per_image=slice_per_image, | |||

| train=True, | |||

| augmentation=augmentation, | |||

| ) | |||

| val_dataset = PanDataset( | |||

| [args.val_dir], | |||

| [args.train_dir], | |||

| [["NIH_PNG",1]], | |||

| image_size, | |||

| slice_per_image=slice_per_image, | |||

| train=False, | |||

| ) | |||

| train_loader = DataLoader( | |||

| train_dataset, | |||

| batch_size=args.batch_size, | |||

| collate_fn=train_dataset.collate_fn, | |||

| shuffle=True, | |||

| drop_last=False, | |||

| num_workers=num_workers, | |||

| ) | |||

| val_loader = DataLoader( | |||

| val_dataset, | |||

| batch_size=args.batch_size, | |||

| collate_fn=val_dataset.collate_fn, | |||

| shuffle=False, | |||

| drop_last=False, | |||

| num_workers=num_workers, | |||

| ) | |||

| # Set up the optimizer, hyperparameter tuning will improve performance here | |||

| lr = 1e-4 | |||

| max_lr = 5e-5 | |||

| wd = 5e-4 | |||

| optimizer = torch.optim.Adam( | |||

| # parameters, | |||

| list(panc_sam_instance.sam.mask_decoder.parameters()), | |||

| # list(panc_sam_instance.mask_decoder.parameters()), | |||

| lr=lr, | |||

| weight_decay=wd, | |||

| ) | |||

| scheduler = torch.optim.lr_scheduler.OneCycleLR( | |||

| optimizer, | |||

| max_lr=max_lr, | |||

| epochs=num_epochs, | |||

| steps_per_epoch=sample_size // (args.accumulative_batch_size // args.batch_size), | |||

| ) | |||

| from statistics import mean | |||

| from tqdm import tqdm | |||

| from torch.nn.functional import threshold, normalize | |||

| loss_function = loss_fn(alpha=0.5, gamma=2.0) | |||

| loss_function.to(device) | |||

| from time import time | |||

| import time as s_time | |||

| log_file = open(f"exps/{exp_id}-{user_input}/log.txt", "a") | |||

| def process_model(data_loader, train=0, save_output=0): | |||

| epoch_losses = [] | |||

| index = 0 | |||

| results = torch.zeros((2, 0, 256, 256)) | |||

| total_dice = 0.0 | |||

| num_samples = 0 | |||

| counterb = 0 | |||

| for image, label in tqdm(data_loader, total=sample_size): | |||

| s_time.sleep(0.6) | |||

| counterb += 1 | |||

| index += 1 | |||

| image = image.to(device) | |||

| label = label.to(device).float() | |||

| input_size = (1024, 1024) | |||

| box = torch.tensor([[200, 200, 750, 800]]).to(device) | |||

| points, point_labels = main_prompt_for_ground_true(label) | |||

| # raise ValueError(points) | |||

| batched_input = [] | |||

| for ibatch in range(args.batch_size): | |||

| batched_input.append( | |||

| { | |||

| "image": image[ibatch], | |||

| "point_coords": points[ibatch], | |||

| "point_labels": point_labels[ibatch], | |||

| "original_size": (1024, 1024) | |||

| # 'original_size': image1.shape[:2] | |||

| }, | |||

| ) | |||

| # raise ValueError(batched_input) | |||

| low_res_masks = panc_sam_instance(batched_input) | |||

| low_res_label = F.interpolate(label, low_res_masks.shape[-2:]) | |||

| binary_mask = normalize(threshold(low_res_masks, 0.0,0)) | |||

| loss = loss_function(low_res_masks, low_res_label) | |||

| loss /= (args.accumulative_batch_size / args.batch_size) | |||

| opened_binary_mask = torch.zeros_like(binary_mask).cpu() | |||

| for j, mask in enumerate(binary_mask[:, 0]): | |||

| numpy_mask = mask.detach().cpu().numpy().astype(np.uint8) | |||

| opened_binary_mask[j][0] = torch.from_numpy(numpy_mask) | |||

| dice = dice_coefficient( | |||

| opened_binary_mask.numpy(), low_res_label.cpu().detach().numpy() | |||

| ) | |||

| # print(dice) | |||

| total_dice += dice | |||

| num_samples += 1 | |||

| average_dice = total_dice / num_samples | |||

| log_file.write(str(average_dice) + "\n") | |||

| log_file.flush() | |||

| if train: | |||

| loss.backward() | |||

| if index % (args.accumulative_batch_size / args.batch_size) == 0: | |||

| # print(loss) | |||

| optimizer.step() | |||

| scheduler.step() | |||

| optimizer.zero_grad() | |||

| index = 0 | |||

| else: | |||

| result = torch.cat( | |||

| ( | |||

| low_res_masks[0].detach().cpu().reshape(1, 1, 256, 256), | |||

| opened_binary_mask[0].reshape(1, 1, 256, 256), | |||

| ), | |||

| dim=0, | |||

| ) | |||

| results = torch.cat((results, result), dim=1) | |||

| if index % (args.accumulative_batch_size / args.batch_size) == 0: | |||

| epoch_losses.append(loss.item()) | |||

| if counterb == sample_size and train: | |||

| break | |||

| elif counterb == sample_size // 5 and not train: | |||

| break | |||

| return epoch_losses, results, average_dice | |||

| def train_model(train_loader, val_loader, K_fold=False, N_fold=7, epoch_num_start=7): | |||

| print("Train model started.") | |||

| train_losses = [] | |||

| train_epochs = [] | |||

| val_losses = [] | |||

| val_epochs = [] | |||

| dice = [] | |||

| dice_val = [] | |||

| results = [] | |||

| if debug==0: | |||

| index = 0 | |||

| ## training with k-fold cross validation: | |||

| last_best_dice = 0 | |||

| for epoch in range(num_epochs): | |||

| if epoch > epoch_num_start: | |||

| kf = KFold(n_splits=N_fold, shuffle=True) | |||

| for i, (train_index, val_index) in enumerate(kf.split(train_loader)): | |||

| print( | |||

| f"=====================EPOCH: {epoch} fold: {i}=====================" | |||

| ) | |||

| print("Training:") | |||

| x_train, x_val = ( | |||

| train_loader[train_index], | |||

| train_loader[val_index], | |||

| ) | |||

| train_epoch_losses, epoch_results, average_dice = process_model( | |||

| x_train, train=1 | |||

| ) | |||

| dice.append(average_dice) | |||

| train_losses.append(train_epoch_losses) | |||

| if (average_dice) > 0.6: | |||

| print("validating:") | |||

| ( | |||

| val_epoch_losses, | |||

| epoch_results, | |||

| average_dice_val, | |||

| ) = process_model(x_val) | |||

| val_losses.append(val_epoch_losses) | |||

| for i in tqdm(range(len(epoch_results[0]))): | |||

| if not os.path.exists(f"ims/batch_{i}"): | |||

| os.mkdir(f"ims/batch_{i}") | |||

| save_img( | |||

| epoch_results[0, i].clone(), | |||

| f"ims/batch_{i}/prob_epoch_{epoch}.png", | |||

| ) | |||

| save_img( | |||

| epoch_results[1, i].clone(), | |||

| f"ims/batch_{i}/pred_epoch_{epoch}.png", | |||

| ) | |||

| train_mean_losses = [mean(x) for x in train_losses] | |||

| val_mean_losses = [mean(x) for x in val_losses] | |||

| np.save("train_losses.npy", train_mean_losses) | |||

| np.save("val_losses.npy", val_mean_losses) | |||

| print(f"Train Dice: {average_dice}") | |||

| print(f"Mean train loss: {mean(train_epoch_losses)}") | |||

| try: | |||

| dice_val.append(average_dice_val) | |||

| print(f"val Dice : {average_dice_val}") | |||

| print(f"Mean val loss: {mean(val_epoch_losses)}") | |||

| results.append(epoch_results) | |||

| val_epochs.append(epoch) | |||

| train_epochs.append(epoch) | |||

| plt.plot( | |||

| val_epochs, | |||

| val_mean_losses, | |||

| train_epochs, | |||

| train_mean_losses, | |||

| ) | |||

| if average_dice_val > last_best_dice: | |||

| torch.save( | |||

| panc_sam_instance, | |||

| f"exps/{exp_id}-{user_input}/sam_tuned_save.pth", | |||

| ) | |||

| last_best_dice = average_dice_val | |||

| del epoch_results | |||

| del average_dice_val | |||

| except: | |||

| train_epochs.append(epoch) | |||

| plt.plot(train_epochs, train_mean_losses) | |||

| print( | |||

| f"=================End of EPOCH: {epoch} Fold :{i}==================\n" | |||

| ) | |||

| plt.yscale("log") | |||

| plt.title("Mean epoch loss") | |||

| plt.xlabel("Epoch Number") | |||

| plt.ylabel("Loss") | |||

| plt.savefig("result") | |||

| else: | |||

| print(f"=====================EPOCH: {epoch}=====================") | |||

| last_best_dice = 0 | |||

| print("Training:") | |||

| train_epoch_losses, epoch_results, average_dice = process_model( | |||

| train_loader, train=1 | |||

| ) | |||

| dice.append(average_dice) | |||

| train_losses.append(train_epoch_losses) | |||

| if (average_dice) > 0.6: | |||

| print("validating:") | |||

| val_epoch_losses, epoch_results, average_dice_val = process_model( | |||

| val_loader | |||

| ) | |||

| val_losses.append(val_epoch_losses) | |||

| # for i in tqdm(range(len(epoch_results[0]))): | |||

| # if not os.path.exists(f"ims/batch_{i}"): | |||

| # os.mkdir(f"ims/batch_{i}") | |||

| # save_img( | |||

| # epoch_results[0, i].clone(), | |||

| # f"ims/batch_{i}/prob_epoch_{epoch}.png", | |||

| # ) | |||

| # save_img( | |||

| # epoch_results[1, i].clone(), | |||

| # f"ims/batch_{i}/pred_epoch_{epoch}.png", | |||

| # ) | |||

| train_mean_losses = [mean(x) for x in train_losses] | |||

| val_mean_losses = [mean(x) for x in val_losses] | |||

| np.save("train_losses.npy", train_mean_losses) | |||

| np.save("val_losses.npy", val_mean_losses) | |||

| print(f"Train Dice: {average_dice}") | |||

| print(f"Mean train loss: {mean(train_epoch_losses)}") | |||

| try: | |||

| dice_val.append(average_dice_val) | |||

| print(f"val Dice : {average_dice_val}") | |||

| print(f"Mean val loss: {mean(val_epoch_losses)}") | |||

| results.append(epoch_results) | |||

| val_epochs.append(epoch) | |||

| train_epochs.append(epoch) | |||

| plt.plot( | |||

| val_epochs, val_mean_losses, train_epochs, train_mean_losses | |||

| ) | |||

| if average_dice_val > last_best_dice: | |||

| torch.save( | |||

| panc_sam_instance, | |||

| f"exps/{exp_id}-{user_input}/sam_tuned_save.pth", | |||

| ) | |||

| last_best_dice = average_dice_val | |||

| del epoch_results | |||

| del average_dice_val | |||

| except: | |||

| train_epochs.append(epoch) | |||

| plt.plot(train_epochs, train_mean_losses) | |||

| print(f"=================End of EPOCH: {epoch}==================\n") | |||

| plt.yscale("log") | |||

| plt.title("Mean epoch loss") | |||

| plt.xlabel("Epoch Number") | |||

| plt.ylabel("Loss") | |||

| plt.savefig("result") | |||

| return train_losses, val_losses, results | |||

| train_losses, val_losses, results = train_model(train_loader, val_loader) | |||

| log_file.close() | |||

| # train and also test the model | |||

+ 456

- 0

SAM_without_prompt.py

View File

| @@ -0,0 +1,456 @@ | |||

| from pathlib import Path | |||

| import numpy as np | |||

| import matplotlib.pyplot as plt | |||

| # import cv2 | |||

| from collections import defaultdict | |||

| import torchvision.transforms as transforms | |||

| import torch | |||

| from torch import nn | |||

| import torch.nn.functional as F | |||

| from segment_anything.utils.transforms import ResizeLongestSide | |||

| import albumentations as A | |||

| from albumentations.pytorch import ToTensorV2 | |||

| import numpy as np | |||

| from einops import rearrange | |||

| import random | |||

| from tqdm import tqdm | |||

| from time import sleep | |||

| from data import * | |||

| from time import time | |||

| from PIL import Image | |||

| from sklearn.model_selection import KFold | |||

| from shutil import copyfile | |||

| from args import get_arguments | |||

| # import wandb_handler | |||

| args = get_arguments() | |||

| def save_img(img, dir): | |||

| img = img.clone().cpu().numpy() + 100 | |||

| if len(img.shape) == 3: | |||

| img = rearrange(img, "c h w -> h w c") | |||

| img_min = np.amin(img, axis=(0, 1), keepdims=True) | |||

| img = img - img_min | |||

| img_max = np.amax(img, axis=(0, 1), keepdims=True) | |||

| img = (img / img_max * 255).astype(np.uint8) | |||

| grey_img = Image.fromarray(img[:, :, 0]) | |||

| img = Image.fromarray(img) | |||

| else: | |||

| img_min = img.min() | |||

| img = img - img_min | |||

| img_max = img.max() | |||

| if img_max != 0: | |||

| img = img / img_max * 255 | |||

| img = Image.fromarray(img).convert("L") | |||

| img.save(dir) | |||

| class loss_fn(torch.nn.Module): | |||

| def __init__(self, alpha=0.7, gamma=2.0, epsilon=1e-5): | |||

| super(loss_fn, self).__init__() | |||

| self.alpha = alpha | |||

| self.gamma = gamma | |||

| self.epsilon = epsilon | |||

| def dice_loss(self, logits, gt, eps=1): | |||

| # Convert logits to probabilities | |||

| # Flatten the tensorsx | |||

| probs = torch.sigmoid(logits) | |||

| probs = probs.view(-1) | |||

| gt = gt.view(-1) | |||

| # Compute Dice coefficient | |||

| intersection = (probs * gt).sum() | |||

| dice_coeff = (2.0 * intersection + eps) / (probs.sum() + gt.sum() + eps) | |||

| # Compute Dice Los[s | |||

| loss = 1 - dice_coeff | |||

| return loss | |||

| def focal_loss(self, logits, gt, gamma=2): | |||

| logits = logits.reshape(-1, 1) | |||

| gt = gt.reshape(-1, 1) | |||

| logits = torch.cat((1 - logits, logits), dim=1) | |||

| probs = torch.sigmoid(logits) | |||

| pt = probs.gather(1, gt.long()) | |||

| modulating_factor = (1 - pt) ** gamma | |||

| # pt_false= pt<=0.5 | |||

| # modulating_factor[pt_false] *= 2 | |||

| focal_loss = -modulating_factor * torch.log(pt + 1e-12) | |||

| # Compute the mean focal loss | |||

| loss = focal_loss.mean() | |||

| return loss # Store as a Python number to save memory | |||

| def forward(self, logits, target): | |||

| logits = logits.squeeze(1) | |||

| target = target.squeeze(1) | |||

| # Dice Loss | |||

| # prob = F.softmax(logits, dim=1)[:, 1, ...] | |||

| dice_loss = self.dice_loss(logits, target) | |||

| # Focal Loss | |||

| focal_loss = self.focal_loss(logits, target.squeeze(-1)) | |||

| alpha = 20.0 | |||

| # Combined Loss | |||

| combined_loss = alpha * focal_loss + dice_loss | |||

| return combined_loss | |||

| def img_enhance(img2, coef=0.2): | |||

| img_mean = np.mean(img2) | |||

| img_max = np.max(img2) | |||

| val = (img_max - img_mean) * coef + img_mean | |||

| img2[img2 < img_mean * 0.7] = img_mean * 0.7 | |||

| img2[img2 > val] = val | |||

| return img2 | |||

| def dice_coefficient(logits, gt): | |||

| eps=1 | |||

| binary_mask = logits>0 | |||

| intersection = (binary_mask * gt).sum(dim=(-2,-1)) | |||

| dice_scores = (2.0 * intersection + eps) / (binary_mask.sum(dim=(-2,-1)) + gt.sum(dim=(-2,-1)) + eps) | |||

| return dice_scores.mean() | |||

| def what_the_f(low_res_masks,label): | |||

| low_res_label = F.interpolate(label, low_res_masks.shape[-2:]) | |||

| dice = dice_coefficient( | |||

| low_res_masks, low_res_label | |||

| ) | |||

| return dice | |||

| accumaltive_batch_size = 8 | |||

| batch_size = 1 | |||

| num_workers = 4 | |||

| slice_per_image = 1 | |||

| num_epochs = 80 | |||

| sample_size = 2000 | |||

| image_size = 1024 | |||

| exp_id = 0 | |||

| found=0 | |||

| debug = 0 | |||

| if debug: | |||

| user_input='debug' | |||

| else: | |||

| user_input = input("Related changes: ") | |||

| while found == 0: | |||

| try: | |||

| os.makedirs(f"exps/{exp_id}-{user_input}/") | |||

| found = 1 | |||

| except: | |||

| exp_id = exp_id + 1 | |||

| copyfile(os.path.realpath(__file__), f"exps/{exp_id}-{user_input}/code.py") | |||

| device = "cuda:1" | |||

| from segment_anything import SamPredictor, sam_model_registry | |||

| # ////////////////// | |||

| class panc_sam(nn.Module): | |||

| def __init__(self, *args, **kwargs) -> None: | |||

| super().__init__(*args, **kwargs) | |||

| sam=sam_model_registry[args.model_type](args.checkpoint) | |||

| # self.sam = torch.load('exps/sam_tuned_save.pth').sam | |||

| self.prompt_encoder = self.sam.prompt_encoder | |||

| for param in self.prompt_encoder.parameters(): | |||

| param.requires_grad = False | |||

| def forward(self, image ,box): | |||

| with torch.no_grad(): | |||

| image_embedding = self.sam.image_encoder(image).detach() | |||

| outputs_prompt = [] | |||

| for curr_embedding in image_embedding: | |||

| with torch.no_grad(): | |||

| sparse_embeddings, dense_embeddings = self.sam.prompt_encoder( | |||

| points=None, | |||

| boxes=None, | |||

| masks=None, | |||

| ) | |||

| low_res_masks, _ = self.sam.mask_decoder( | |||

| image_embeddings=curr_embedding, | |||

| image_pe=self.sam.prompt_encoder.get_dense_pe().detach(), | |||

| sparse_prompt_embeddings=sparse_embeddings.detach(), | |||

| dense_prompt_embeddings=dense_embeddings.detach(), | |||

| multimask_output=False, | |||

| ) | |||

| outputs_prompt.append(low_res_masks) | |||

| low_res_masks_promtp = torch.cat(outputs_prompt, dim=0) | |||

| # raise ValueError(low_res_masks_promtp) | |||

| return low_res_masks_promtp | |||

| # /////////////// | |||

| augmentation = A.Compose( | |||

| [ | |||

| A.Rotate(limit=90, p=0.5), | |||

| A.RandomBrightnessContrast(brightness_limit=0.3, contrast_limit=0.3, p=1), | |||

| A.RandomResizedCrop(1024, 1024, scale=(0.9, 1.0), p=1), | |||

| A.HorizontalFlip(p=0.5), | |||

| A.CLAHE(clip_limit=2.0, tile_grid_size=(8, 8), p=0.5), | |||

| A.CoarseDropout(max_holes=8, max_height=16, max_width=16, min_height=8, min_width=8, fill_value=0, p=0.5), | |||

| A.RandomScale(scale_limit=0.3, p=0.5), | |||

| A.GaussNoise(var_limit=(10.0, 50.0), p=0.5), | |||

| A.GridDistortion(p=0.5), | |||

| ] | |||

| ) | |||

| panc_sam_instance=panc_sam() | |||

| panc_sam_instance.to(device) | |||

| panc_sam_instance.train() | |||

| train_dataset = PanDataset( | |||

| [args.train_dir], | |||

| [args.train_labels_dir], | |||

| [["NIH_PNG",1]], | |||

| image_size, | |||

| slice_per_image=slice_per_image, | |||

| train=True, | |||

| augmentation=augmentation, | |||

| ) | |||

| val_dataset = PanDataset( | |||

| [args.val_dir], | |||

| [args.val_labels_dir], | |||

| [["NIH_PNG",1]], | |||

| image_size, | |||

| slice_per_image=slice_per_image, | |||

| train=False, | |||

| ) | |||

| train_loader = DataLoader( | |||

| train_dataset, | |||

| batch_size=batch_size, | |||

| collate_fn=train_dataset.collate_fn, | |||

| shuffle=True, | |||

| drop_last=False, | |||

| num_workers=num_workers, | |||

| ) | |||

| val_loader = DataLoader( | |||

| val_dataset, | |||

| batch_size=batch_size, | |||

| collate_fn=val_dataset.collate_fn, | |||

| shuffle=False, | |||

| drop_last=False, | |||

| num_workers=num_workers, | |||

| ) | |||

| # Set up the optimizer, hyperparameter tuning will improve performance here | |||

| lr = 1e-4 | |||

| max_lr = 5e-5 | |||

| wd = 5e-4 | |||

| optimizer = torch.optim.Adam( | |||

| # parameters, | |||

| list(panc_sam_instance.sam.mask_decoder.parameters()), | |||

| lr=lr, weight_decay=wd | |||

| ) | |||

| scheduler = torch.optim.lr_scheduler.OneCycleLR( | |||

| optimizer, | |||

| max_lr=max_lr, | |||

| epochs=num_epochs, | |||

| steps_per_epoch=sample_size // (accumaltive_batch_size // batch_size), | |||

| ) | |||

| from statistics import mean | |||

| from tqdm import tqdm | |||

| from torch.nn.functional import threshold, normalize | |||

| loss_function = loss_fn(alpha=0.5, gamma=2.0) | |||

| loss_function.to(device) | |||

| from time import time | |||

| import time as s_time | |||

| log_file = open(f"exps/{exp_id}-{user_input}/log.txt", "a") | |||

| def process_model(data_loader, train=0, save_output=0): | |||

| epoch_losses = [] | |||

| index = 0 | |||

| results = torch.zeros((2, 0, 256, 256)) | |||

| total_dice = 0.0 | |||

| num_samples = 0 | |||

| counterb = 0 | |||

| for image, label in tqdm(data_loader, total=sample_size): | |||

| counterb += 1 | |||

| num_samples += 1 | |||

| index += 1 | |||

| image = image.to(device) | |||

| label = label.to(device).float() | |||

| input_size = (1024, 1024) | |||

| box = torch.tensor([[200, 200, 750, 800]]).to(device) | |||

| low_res_masks = panc_sam_instance(image,box) | |||

| low_res_label = F.interpolate(label, low_res_masks.shape[-2:]) | |||

| dice = what_the_f(low_res_masks,low_res_label) | |||

| binary_mask = normalize(threshold(low_res_masks, 0.0, 0)) | |||

| total_dice += dice | |||

| average_dice = total_dice / num_samples | |||

| log_file.write(str(average_dice) + "\n") | |||

| log_file.flush() | |||

| loss = loss_function.forward(low_res_masks, low_res_label) | |||

| loss /= accumaltive_batch_size / batch_size | |||

| if train: | |||

| loss.backward() | |||

| if index % (accumaltive_batch_size / batch_size) == 0: | |||

| # print(loss) | |||

| optimizer.step() | |||

| scheduler.step() | |||

| optimizer.zero_grad() | |||

| index = 0 | |||

| else: | |||

| pass | |||

| if index % (accumaltive_batch_size / batch_size) == 0: | |||

| epoch_losses.append(loss.item()) | |||

| if counterb == sample_size and train: | |||

| break | |||

| elif counterb == sample_size / 10 and not train: | |||

| break | |||

| return epoch_losses, results, average_dice | |||

| def train_model(train_loader, val_loader, K_fold=False, N_fold=7, epoch_num_start=7): | |||

| print("Train model started.") | |||

| train_losses = [] | |||

| train_epochs = [] | |||

| val_losses = [] | |||

| val_epochs = [] | |||

| dice = [] | |||

| dice_val = [] | |||

| results = [] | |||

| index = 0 | |||

| last_best_dice = 0 | |||

| for epoch in range(num_epochs): | |||

| print(f"=====================EPOCH: {epoch + 1}=====================") | |||

| log_file.write( | |||

| f"=====================EPOCH: {epoch + 1}===================\n" | |||

| ) | |||

| print("Training:") | |||

| train_epoch_losses, epoch_results, average_dice = process_model( | |||

| train_loader, train=1 | |||

| ) | |||

| dice.append(average_dice) | |||

| train_losses.append(train_epoch_losses) | |||

| if (average_dice) > 0.5: | |||

| print("valing:") | |||

| val_epoch_losses, epoch_results, average_dice_val = process_model( | |||

| val_loader | |||

| ) | |||

| val_losses.append(val_epoch_losses) | |||

| for i in tqdm(range(len(epoch_results[0]))): | |||

| if not os.path.exists(f"ims/batch_{i}"): | |||

| os.mkdir(f"ims/batch_{i}") | |||

| save_img(epoch_results[0, i].clone(), f"ims/batch_{i}/prob_epoch_{epoch}.png") | |||

| save_img(epoch_results[1, i].clone(), f"ims/batch_{i}/pred_epoch_{epoch}.png") | |||

| train_mean_losses = [mean(x) for x in train_losses] | |||

| # raise ValueError(average_dice) | |||

| val_mean_losses = [mean(x) for x in val_losses] | |||

| np.save("train_losses.npy", train_mean_losses) | |||

| np.save("val_losses.npy", val_mean_losses) | |||

| print(f"Train Dice: {average_dice}") | |||

| print(f"Mean train loss: {mean(train_epoch_losses)}") | |||

| try: | |||

| dice_val.append(average_dice_val) | |||

| print(f"val Dice : {average_dice_val}") | |||

| print(f"Mean val loss: {mean(val_epoch_losses)}") | |||

| results.append(epoch_results) | |||

| val_epochs.append(epoch) | |||

| train_epochs.append(epoch) | |||

| plt.plot(val_epochs, val_mean_losses, train_epochs, train_mean_losses) | |||

| print(last_best_dice) | |||

| log_file.write(f'bestwieght:{last_best_dice}') | |||

| if average_dice_val > last_best_dice: | |||

| torch.save(panc_sam_instance, f"exps/{exp_id}-{user_input}/sam_tuned_save.pth") | |||

| last_best_dice = average_dice_val | |||

| del epoch_results | |||

| del average_dice_val | |||

| except: | |||

| train_epochs.append(epoch) | |||

| plt.plot(train_epochs, train_mean_losses) | |||

| print(f"=================End of EPOCH: {epoch}==================\n") | |||

| plt.yscale("log") | |||

| plt.title("Mean epoch loss") | |||

| plt.xlabel("Epoch Number") | |||

| plt.ylabel("Loss") | |||

| plt.savefig("result") | |||

| return train_losses, val_losses, results | |||

| train_losses, val_losses, results = train_model(train_loader, val_loader) | |||

| log_file.close() | |||

| # train and also val the model | |||

+ 36

- 0

args.py

View File

| @@ -0,0 +1,36 @@ | |||

| import argparse | |||

| def get_arguments(): | |||

| parser = argparse.ArgumentParser(description="Your program's description here") | |||

| parser.add_argument('--debug', action='store_true', help='Enable debug mode') | |||

| parser.add_argument('--accumulative_batch_size', type=int, default=2, help='Accumulative batch size') | |||

| parser.add_argument('--batch_size', type=int, default=1, help='Batch size') | |||

| parser.add_argument('--num_workers', type=int, default=1, help='Number of workers') | |||

| parser.add_argument('--slice_per_image', type=int, default=1, help='Slices per image') | |||

| parser.add_argument('--num_epochs', type=int, default=40, help='Number of epochs') | |||

| parser.add_argument('--sample_size', type=int, default=4, help='Sample size') | |||

| parser.add_argument('--image_size', type=int, default=1024, help='Image size') | |||

| parser.add_argument('--run_name', type=str, default='debug', help='The name of the run') | |||

| parser.add_argument('--lr', type=float, default=1e-3, help='Learning Rate') | |||

| parser.add_argument('--batch_step_one', type=int, default=15, help='Batch one') | |||

| parser.add_argument('--batch_step_two', type=int, default=25, help='Batch two') | |||

| parser.add_argument('--conv_model', type=str, default=None, help='Path to convolution model') | |||

| parser.add_argument('--custom_bias', type=float, default=0, help='Learning Rate') | |||

| parser.add_argument("--inference", action="store_true", help="Set for inference") | |||

| ######################################################################################## | |||

| parser.add_argument(" --promptprovider" , type=str , help="path weight of prompt provider") | |||

| parser.add_argument(" --pointbasemodel" , type=str , help="path weight of prompt provider") | |||

| parser.add_argument("--train_dir",type=str, help="Path to the training data") | |||

| parser.add_argument("--test_dir",type=str, help="Path to the test data") | |||

| parser.add_argument("--test_labels_dir",type=str, help="Path to the test data") | |||

| parser.add_argument("--train_labels_dir",type=str, help="Path to the test data") | |||

| parser.add_argument("--images_dir",type=str, help="Path to the test data") | |||

| parser.add_argument("--checkpoint",type=str, help="Path to the test data") | |||

| parser.add_argument("--model_type",type=str, help="Path to the test data",default="vit_h") | |||

| return parser.parse_args() | |||

+ 291

- 0

data.py

View File

| @@ -0,0 +1,291 @@ | |||

| import matplotlib.pyplot as plt | |||

| import os | |||

| import numpy as np | |||

| import random | |||

| from segment_anything.utils.transforms import ResizeLongestSide | |||

| from einops import rearrange | |||

| import torch | |||

| from segment_anything import SamPredictor, sam_model_registry | |||

| from torch.utils.data import DataLoader | |||

| from time import time | |||

| import torch.nn.functional as F | |||

| import cv2 | |||

| from PIL import Image | |||

| import cv2 | |||

| from kernel.pre_processer import PreProcessing | |||

| def apply_median_filter(input_matrix, kernel_size=5, sigma=0): | |||

| # Apply the Gaussian filter | |||

| filtered_matrix = cv2.medianBlur(input_matrix.astype(np.uint8), kernel_size) | |||

| return filtered_matrix.astype(np.float32) | |||

| def apply_guassain_filter(input_matrix, kernel_size=(7, 7), sigma=0): | |||

| smoothed_matrix = cv2.blur(input_matrix, kernel_size) | |||

| return smoothed_matrix.astype(np.float32) | |||

| def img_enhance(img2, over_coef=0.8, under_coef=0.7): | |||

| img2 = apply_median_filter(img2) | |||

| img_blure = apply_guassain_filter(img2) | |||

| img2 = img2 - 0.8 * img_blure | |||

| img_mean = np.mean(img2, axis=(1, 2)) | |||

| img_max = np.amax(img2, axis=(1, 2)) | |||

| val = (img_max - img_mean) * over_coef + img_mean | |||

| img2 = (img2 < img_mean * under_coef).astype(np.float32) * img_mean * under_coef + ( | |||

| (img2 >= img_mean * under_coef).astype(np.float32) | |||

| ) * img2 | |||

| img2 = (img2 <= val).astype(np.float32) * img2 + (img2 > val).astype( | |||

| np.float32 | |||

| ) * val | |||

| return img2 | |||

| def normalize_and_pad(x, img_size): | |||

| """Normalize pixel values and pad to a square input.""" | |||

| pixel_mean = torch.tensor([[[[123.675]], [[116.28]], [[103.53]]]]) | |||

| pixel_std = torch.tensor([[[[58.395]], [[57.12]], [[57.375]]]]) | |||

| # Normalize colors | |||

| x = (x - pixel_mean) / pixel_std | |||

| # Pad | |||

| h, w = x.shape[-2:] | |||

| padh = img_size - h | |||

| padw = img_size - w | |||

| x = F.pad(x, (0, padw, 0, padh)) | |||

| return x | |||

| def preprocess(img_enhanced, img_enhance_times=1, over_coef=0.4, under_coef=0.5): | |||

| # img_enhanced = img_enhanced+0.1 | |||

| img_enhanced -= torch.amin(img_enhanced, dim=(1, 2), keepdim=True) | |||

| img_max = torch.amax(img_enhanced, axis=(1, 2), keepdims=True) | |||

| img_max[img_max == 0] = 1 | |||

| img_enhanced = img_enhanced / img_max | |||

| # raise ValueError(img_max) | |||

| img_enhanced = img_enhanced.unsqueeze(1) | |||

| img_enhanced = PreProcessing.CLAHE(img_enhanced, clip_limit=9.0, grid_size=(4, 4)) | |||

| img_enhanced = img_enhanced[0] | |||

| # for i in range(img_enhance_times): | |||

| # img_enhanced=img_enhance(img_enhanced.astype(np.float32), over_coef=over_coef,under_coef=under_coef) | |||

| img_enhanced -= torch.amin(img_enhanced, dim=(1, 2), keepdim=True) | |||

| larg_imag = ( | |||

| img_enhanced / torch.amax(img_enhanced, axis=(1, 2), keepdims=True) * 255 | |||

| ).type(torch.uint8) | |||

| return larg_imag | |||

| def prepare(larg_imag, target_image_size): | |||

| # larg_imag = 255 - larg_imag | |||

| larg_imag = rearrange(larg_imag, "S H W -> S 1 H W") | |||

| larg_imag = torch.tensor( | |||

| np.concatenate([larg_imag, larg_imag, larg_imag], axis=1) | |||

| ).float() | |||

| transform = ResizeLongestSide(target_image_size) | |||

| larg_imag = transform.apply_image_torch(larg_imag) | |||

| larg_imag = normalize_and_pad(larg_imag, target_image_size) | |||

| return larg_imag | |||

| def process_single_image(image_path, target_image_size): | |||

| # Load the image | |||

| if image_path.endswith(".png") or image_path.endswith(".jpg"): | |||

| data = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE).squeeze() | |||

| else: | |||

| data = np.load(image_path) | |||

| x = rearrange(data, "H W -> 1 H W") | |||

| x = torch.tensor(x) | |||

| # Apply preprocessing | |||

| x = preprocess(x) | |||

| x = prepare(x, target_image_size) | |||

| return x | |||

| class PanDataset: | |||

| def __init__( | |||

| self, | |||

| images_dirs, | |||

| labels_dirs, | |||

| datasets, | |||

| target_image_size, | |||

| slice_per_image, | |||

| train=True, | |||

| ratio=0.9, | |||

| augmentation=None, | |||

| ): | |||

| self.data_set_names = [] | |||

| self.labels_path = [] | |||

| self.images_path = [] | |||

| for labels_dir, images_dir, dataset_name in zip( | |||

| labels_dirs, images_dirs, datasets | |||

| ): | |||

| if train == True: | |||

| self.data_set_names.extend( | |||

| sorted([dataset_name[0] for _ in os.listdir(labels_dir)[:int(len(os.listdir(labels_dir)) * ratio)]]) | |||

| ) | |||

| self.labels_path.extend( | |||

| sorted([os.path.join(labels_dir, item) for item in os.listdir(labels_dir)[:int(len(os.listdir(labels_dir)) * ratio)]]) | |||

| ) | |||

| self.images_path.extend( | |||

| sorted([os.path.join(images_dir, item) for item in os.listdir(images_dir)[:int(len(os.listdir(images_dir)) * ratio)]]) | |||

| ) | |||

| else: | |||

| self.data_set_names.extend( | |||

| sorted([dataset_name[0] for _ in os.listdir(labels_dir)[int(len(os.listdir(labels_dir)) * ratio):]]) | |||

| ) | |||

| self.labels_path.extend( | |||

| sorted([os.path.join(labels_dir, item) for item in os.listdir(labels_dir)[int(len(os.listdir(labels_dir)) * ratio):]]) | |||

| ) | |||

| self.images_path.extend( | |||

| sorted([os.path.join(images_dir, item) for item in os.listdir(images_dir)[int(len(os.listdir(images_dir)) * ratio):]]) | |||

| ) | |||

| self.target_image_size = target_image_size | |||

| self.datasets = datasets | |||

| self.slice_per_image = slice_per_image | |||

| self.augmentation = augmentation | |||

| def __getitem__(self, idx): | |||

| data = np.load(self.images_path[idx]) | |||

| raw_data = data | |||

| labels = np.load(self.labels_path[idx]) | |||

| if self.data_set_names[idx] == "NIH_PNG": | |||

| x = rearrange(data.T, "H W -> 1 H W") | |||

| y = rearrange(labels.T, "H W -> 1 H W") | |||

| y = (y == 1).astype(np.uint8) | |||

| elif self.data_set_names[idx] == "Abdment1kPNG": | |||

| x = rearrange(data, "H W -> 1 H W") | |||

| y = rearrange(labels, "H W -> 1 H W") | |||

| y = (y == 4).astype(np.uint8) | |||

| else: | |||

| raise ValueError("Incorect dataset name") | |||

| x = torch.tensor(x) | |||

| y = torch.tensor(y) | |||

| x = preprocess(x) | |||

| x, y = self.apply_augmentation(x.numpy(), y.numpy()) | |||

| y = F.interpolate(y.unsqueeze(1), size=self.target_image_size) | |||

| x = prepare(x, self.target_image_size) | |||

| return x, y ,raw_data | |||

| def collate_fn(self, data): | |||

| images, labels , raw_data = zip(*data) | |||

| images = torch.cat(images, dim=0) | |||

| labels = torch.cat(labels, dim=0) | |||

| # raw_data = torch.cat(raw_data, dim=0) | |||

| return images, labels , raw_data | |||

| def __len__(self): | |||

| return len(self.images_path) | |||

| def apply_augmentation(self, image, label): | |||

| if self.augmentation: | |||

| # If image and label are tensors, convert them to numpy arrays | |||

| # raise ValueError(label.shape) | |||

| augmented = self.augmentation(image=image[0], mask=label[0]) | |||

| image = torch.tensor(augmented["image"]) | |||

| label = torch.tensor(augmented["mask"]) | |||

| # You might want to convert back to torch.Tensor after the transformation | |||

| image = image.unsqueeze(0) | |||

| label = label.unsqueeze(0) | |||

| else: | |||

| image = torch.Tensor(image) | |||

| label = torch.Tensor(label) | |||

| return image, label | |||

| import albumentations as A | |||

| if __name__ == "__main__": | |||

| model_type = "vit_h" | |||

| batch_size = 4 | |||

| num_workers = 4 | |||

| slice_per_image = 1 | |||

| image_size = 1024 | |||

| checkpoint = "checkpoints/sam_vit_h_4b8939.pth" | |||

| panc_sam_instance = sam_model_registry[model_type](checkpoint=checkpoint) | |||

| augmentation = A.Compose( | |||

| [ | |||

| A.Rotate(limit=10, p=0.5), | |||

| A.RandomBrightnessContrast(brightness_limit=0.2, contrast_limit=0.2, p=1), | |||

| A.RandomResizedCrop(1024, 1024, scale=(0.9, 1.0), p=1), | |||

| ] | |||

| ) | |||

| train_dataset = PanDataset( | |||

| "bath image", | |||

| "bath label", | |||

| image_size, | |||

| slice_per_image=slice_per_image, | |||

| train=True, | |||

| augmentation=None, | |||

| ) | |||

| train_loader = DataLoader( | |||

| train_dataset, | |||

| batch_size=batch_size, | |||

| collate_fn=train_dataset.collate_fn, | |||

| shuffle=True, | |||

| drop_last=False, | |||

| num_workers=num_workers, | |||

| ) | |||

| # x, y = dataset[7] | |||

| # print(x.shape, y.shape) | |||

| now = time() | |||

| for images, labels in train_loader: | |||

| # pass | |||

| image_numpy = images[0].permute(1, 2, 0).cpu().numpy() | |||

| # Ensure that the values are in the correct range [0, 255] and cast to uint8 | |||

| image_numpy = (image_numpy * 255).astype(np.uint8) | |||

| # Save the image using OpenCV | |||

| cv2.imwrite("image2.png", image_numpy[:, :, 1]) | |||

| break | |||

| # print((time() - now) / batch_size / slice_per_image) | |||

+ 166

- 0

data_handler/save.py

View File

| @@ -0,0 +1,166 @@ | |||

| import matplotlib.pyplot as plt | |||

| import os | |||

| import numpy as np | |||

| import random | |||

| from segment_anything.utils.transforms import ResizeLongestSide | |||

| from einops import rearrange | |||

| import torch | |||

| import os | |||

| from segment_anything import SamPredictor, sam_model_registry | |||

| from torch.utils.data import DataLoader | |||

| from time import time | |||

| import torch.nn.functional as F | |||

| import cv2 | |||

| # def preprocess(image_paths, label_paths): | |||

| # preprocessed_images = [] | |||

| # preprocessed_labels = [] | |||

| # for image_path, label_path in zip(image_paths, label_paths): | |||

| # # Load image and label from paths | |||

| # image = plt.imread(image_path) | |||

| # label = plt.imread(label_path) | |||

| # # Perform preprocessing steps here | |||

| # # ... | |||

| # preprocessed_images.append(image) | |||

| # preprocessed_labels.append(label) | |||

| # return preprocessed_images, preprocessed_labels | |||

| class PanDataset: | |||

| def __init__(self, images_dir, labels_dir, slice_per_image, train=True,**kwargs): | |||

| #for Abdonomial | |||

| self.images_path = sorted([os.path.join(images_dir, item[:item.rindex('.')] + '_0000.npz') for item in os.listdir(labels_dir) if item.endswith('.npz') and not item.startswith('.')]) | |||

| self.labels_path = sorted([os.path.join(labels_dir, item) for item in os.listdir(labels_dir) if item.endswith('.npz') and not item.startswith('.')]) | |||

| #for NIH | |||

| # self.images_path = sorted([os.path.join(images_dir, item) for item in os.listdir(labels_dir) if item.endswith('.npy')]) | |||

| # self.labels_path = sorted([os.path.join(labels_dir, item) for item in os.listdir(labels_dir) if item.endswith('.npy')]) | |||

| N = len(self.images_path) | |||

| n = int(N * 0.8) | |||

| self.train = train | |||

| self.slice_per_image = slice_per_image | |||

| if train: | |||

| self.labels_path = self.labels_path[:n] | |||

| self.images_path = self.images_path[:n] | |||

| else: | |||

| self.labels_path = self.labels_path[n:] | |||

| self.images_path = self.images_path[n:] | |||

| self.args=kwargs['args'] | |||

| def __getitem__(self, idx): | |||

| now = time() | |||

| # for abdoment | |||

| data = np.load(self.images_path[idx])['arr_0'] | |||

| labels = np.load(self.labels_path[idx])['arr_0'] | |||

| #for nih | |||

| # data = np.load(self.images_path[idx]) | |||

| # labels = np.load(self.labels_path[idx]) | |||

| H, W, C = data.shape | |||

| positive_slices = np.any(labels == 1, axis=(0, 1)) | |||

| # print("Load from file time = ", time() - now) | |||

| now = time() | |||

| # Find the first and last positive slices | |||

| first_positive_slice = np.argmax(positive_slices) | |||

| last_positive_slice = labels.shape[2] - np.argmax(positive_slices[::-1]) - 1 | |||

| dist=last_positive_slice-first_positive_slice | |||

| if self.train: | |||

| save_dir = self.args.images_dir # data address here | |||

| labels_save_dir = self.args.labels_dir # label address here | |||

| else : | |||

| save_dir = self.args.test_images_dir # data address here | |||

| labels_save_dir = self.args.test_labels_dir # label address here | |||

| j=0 | |||

| for j in range(1): | |||

| slice = range(len(labels[0,0,:])) | |||

| # raise ValueError(labels.shape) | |||

| image_paths = [] | |||

| label_paths = [] | |||

| for i, slc_idx in enumerate(slice): | |||

| # Saving Image Slices | |||

| image_array = data[:, :, slc_idx] | |||

| # Resize the array to 512x512 | |||

| resized_image_array = cv2.resize(image_array, (512, 512)) | |||

| min_val = resized_image_array.min() | |||

| max_val = resized_image_array.max() | |||

| normalized_image_array = ((resized_image_array - min_val) / (max_val - min_val) * 255).astype(np.uint8) | |||

| image_paths.append(f"slice_{i}_{idx}.npy") | |||

| if normalized_image_array.max()>0: | |||

| np.save(os.path.join(save_dir, image_paths[-1]), normalized_image_array) | |||

| # Saving Corresponding Label Slices | |||

| label_array = labels[:, :, slc_idx] | |||

| # Resize the array to 512x512 | |||

| resized_label_array = cv2.resize(label_array, (512, 512)) | |||

| min_val = resized_label_array.min() | |||

| max_val = resized_label_array.max() | |||

| # raise ValueError(np.unique(resized_label_array)) | |||

| # normalized_label_array = ((resized_label_array - min_val) / (max_val - min_val) * 255).astype(np.uint8) | |||

| label_paths.append(f"label_{i}_{idx}.npy") | |||

| np.save(os.path.join(labels_save_dir, label_paths[-1]), resized_label_array) | |||

| return data | |||

| def collate_fn(self, data): | |||

| return data | |||

| def __len__(self): | |||

| return len(self.images_path) | |||

| if __name__ == '__main__': | |||

| model_type = 'vit_b' | |||

| batch_size = 4 | |||

| num_workers = 4 | |||

| slice_per_image = 1 | |||

| dataset = PanDataset('../../Data/AbdomenCT-1K/numpy/images', '../../Data/AbdomenCT-1K/numpy/labels', | |||

| slice_per_image=slice_per_image) | |||

| # x, y = dataset[7] | |||

| # # print(x.shape, y.shape) | |||

| dataloader = DataLoader(dataset, batch_size=batch_size, collate_fn=dataset.collate_fn, shuffle=True, drop_last=False, num_workers=num_workers) | |||

| now = time() | |||

| for data in dataloader: | |||

| # pass | |||

| # print(images.shape, labels.shape) | |||

| continue | |||